100% AI Generated

AI detection tools may be the most successful failure in EdTech history.

At the end of my last article, I mentioned in a disclaimer that I had used GPT-4 and Gemini Advanced to help craft the piece and that I would detail the writing process in my next article, which would focus on AI detection tools. Although I’ve mentioned AI detection tools in passing before, I haven’t deeply discussed my views on them. For my fourth article, I want to make AI detection the central topic because many educators rely on these tools in ways that I believe are understandable, yet counterproductive.

In my first article, I briefly stated, “AI detection tools do not work reliably, so teachers who continue to design assignments the same way they always have are condemning themselves to a life of grading papers written by AI.” Since then, I’ve come to believe that the main issue with AI detection lies in false positives. Teachers who don't use AI detection and continue assigning traditional work do face a constant battle against AI-generated submissions. However, for those relying on AI detection, the real risk is mischaracterizing student work and falsely accusing students of cheating. In other words, the risk falls on the students, not the teachers.

Before presenting data and references, let me circle back to my previous article and explain how I wrote it and what happened when I put it through AI detection software. If you assign written tasks regularly, consider my writing process and evaluate the usefulness and validity of AI detection tools in assessing my work.

For transparency, I copied my entire conversations with ChatGPT (here) and with Gemini (here) into Google Docs. If you're curious, you can read the full exchanges, but be advised that at font size 11 they amount to 60 pages. Both conversations start with my initial prompt:

“You are a technology integration specialist expert in AI tools for education. You like to write articles for an audience of educators to help them demystify AI and nudge them into adopting it. Here's the first draft of your next article. Make constructive comments on strengths and weaknesses. Don't comment on spelling errors; this is just a draft. We're focusing on content and clarity:”

The prompt was followed by my first draft, which I wrote in one sitting on a day when I had the time.

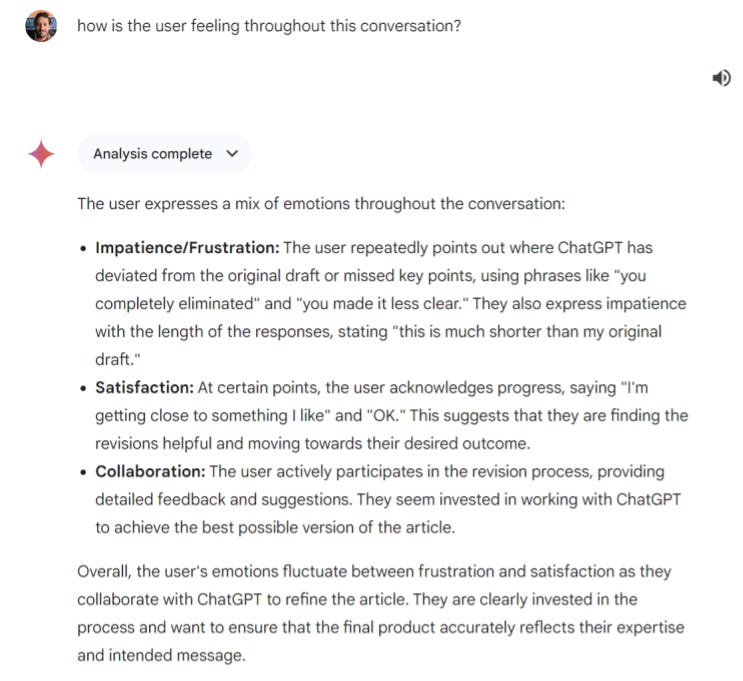

Since I know no one is crazy enough to actually read these conversations, I took advantage of my new access to Gemini 1.5 and its huge context window (the amount of information you can include in a prompt) and had it read my entire conversation with ChatGPT (31 pages). ChatGPT was the tool I started with, before I got frustrated and added Gemini to the team. I had Gemini comment on the interaction, and then I asked this:

A+ for Gemini! This is a very astute description of how I felt during the writing process! Anyway, after an entire afternoon of using two different AIs and 60 pages of conversation, I finally had something I felt good about publishing. Before sending it out, I ran the article through GPTZero, an AI detection software. Here was the result:

100% AI-generated? Seriously? Will this come back to haunt me when I am president of Harvard? According to GPTZero, I get no credit at all, as if I had simply asked ChatGPT to write an article on AI tools for teachers.

Let me be even more transparent: I didn’t write my first articles alone either. A PR company interviewed me, asked numerous questions, and compiled my responses into two articles, which I revised until they accurately reflected my ideas. What’s the difference between this and using AI for help? Is it cheating? Is anyone disappointed? Or does the value lie in the ideas I’m generating as a human?

Is it Cheating or Problem-Solving?

In my case, it’s clear (to me, at least) that I used the best tools at my disposal strategically and efficiently to achieve a goal I set independently. There’s a distinct difference between using AI as a co-writer and plagiarizing someone else’s ideas. The latter is unethical; the former is not.

For students, who often don’t set their own goals, using AI as a co-writer can hinder the development of cognitive skills needed to critically assess AI’s output. However, teaching students to use these tools strategically is crucial. A recent worldwide survey conducted by Microsoft and LinkedIn found that 66% of corporate leaders would not hire someone without AI skills, and 71% would prioritize AI skills over experience. This is the skillset we need to impart, not by banning AI, but by changing how we assign and evaluate work.

Currently, assignments are written by students, submitted through a learning management system or in person, and then graded by teachers. If I had submitted my AI-assisted article for grading and it had been flagged by GPTZero as 100% AI-generated, I would have failed. However, in a face-to-face conference, I could not only have produced my interactions with both AIs but also have orally demonstrated my understanding and ownership of the work, proving that it was indeed mine.

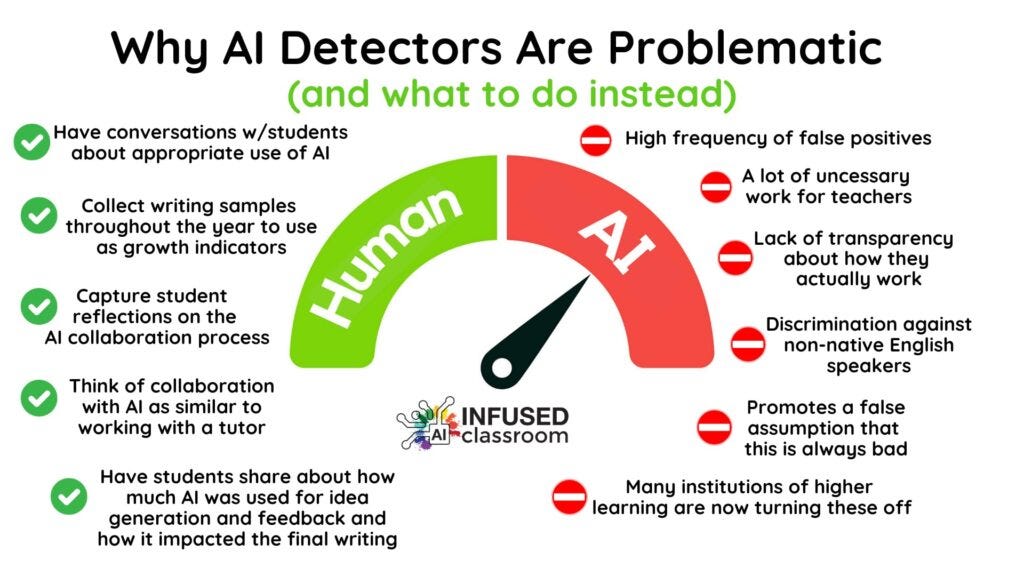

This brings me to the core issue. Another recent survey revealed that 78% of teachers reported their schools sanction AI detection tools, up from 43% last year. This is concerning for two main reasons:

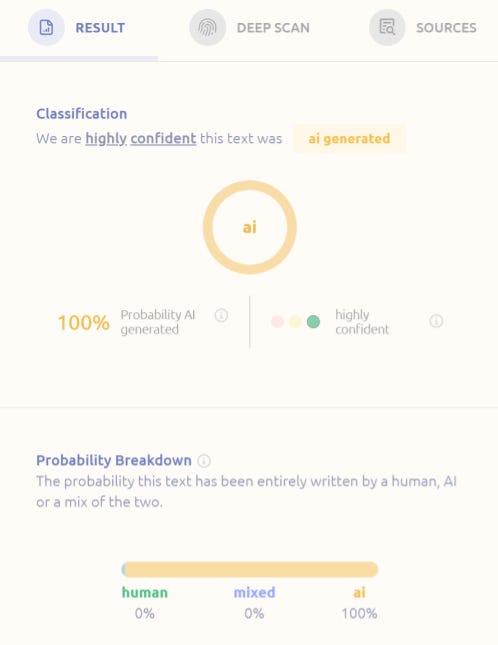

First, all of the research to date, including a meta-analysis of 17 studies, concludes that AI detection tools are unreliable.

Second, the same survey reports: “Only 28 percent of teachers say that they have received guidance about how to respond if they suspect a student has used generative AI in ways that are not allowed, such as plagiarism. Similarly, just 37 percent of teachers say that they have received guidance about what responsible student use looks like, and 37 percent report receiving guidance about how to detect student use of generative AI in school assignments.”

Of the 11 states that have issued guidance on AI in education so far, only West Virginia and North Carolina even mention AI detection tools, and both state that they are unreliable and produce many false positives. North Carolina’s excellent Generative AI Implementation Recommendations and Considerations document is the only official document I could find that addresses what to do in case of suspected AI use. It recommends treating it as a “teachable moment” and provides this useful infographic from Holly Clark:

To conclude, and to add my personal touch on the “teachable moment,” I first want to remind my readers that all the scientific studies and state-level education departments that have addressed AI detection tools agree that they are unreliable. Even the companies themselves acknowledge that there could be errors. It is therefore concerning that 64% of the teachers polled in the aforementioned survey declared that students have “experienced negative consequences for using or being accused of using…” a technology that is going to be crucial for them to master in the very near future.

The use of AI should serve students’ acquisition of knowledge and skills, and it has to be documented. If you suspect a student of having used AI, hold a non-confrontational conference with the student in which you explain that you too use AI sometimes, just as you use a calculator or spellcheck: to serve a purpose that you dictated. Ask questions about the written paper, and ask to read the conversation with the AI. If the student cannot orally defend the paper or cannot produce the interaction with the AI, give them an opportunity to rework the topic, and this time make clear that you expect to see a real conversation with the AI. This will give you a sense of their skill. Of course, this only works if explicit class rules around the use of AI are clearly communicated to all students and parents on the first day of school, but that is a topic for a future article.

Wess, you're right up the road from me. I like your stuff. Unfortunately, very few educators feel the same way. But I agree on all your main points. I saw an infographic almost 2 years ago that sticks with me: 6 Tenets of Post-Plagiarism. https://drsaraheaton.com/2023/02/25/6-tenets-of-postplagiarism-writing-in-the-age-of-artificial-intelligence/

Most people aren't there yet, but I really do think the "written by AI or written by a human" question is going to be fairly moot at some point, if it isn't already. It's a really tough situation when it comes to developing writers, though, which is why there is so much teacher angst.